Google DeepMind Introduces SynthID: A Move Towards Transparent Watermarking in AI-Generated Art

In an effort to make AI-generated images more transparent, Google DeepMind has unveiled SynthID, a digital watermarking and identification tool designed specifically for generative art. Unlike traditional watermarks, SynthID's mark is imperceptible to the human eye and is embedded directly in the image's pixels. In its initial phase, SynthID is available to a limited set of users of Imagen, one of Google's cloud-based AI tools.

Generative art, though revolutionary, comes with its share of challenges, the most significant being its capacity to produce deepfakes. A lighthearted example that went viral was a Midjourney-generated image of the pope in a white puffer jacket. Such cases highlight both the potential and the risks of increasingly capable generative tools. The deeper concern is the misuse of AI art in sensitive areas such as political campaigns, where the consequences could be far-reaching and damaging. In response, seven leading AI companies agreed after a White House meeting in July to develop watermarking tools for AI-generated content so that its origin would be clear. Google is the first of the group to launch such a system.

Google remains guarded about SynthID's technical details (a precaution against attempts to evade it), but it says the watermark is resilient to basic edits. Keeping a watermark invisible while also making it survive image alterations is a difficult balance. DeepMind's announcement stressed that SynthID is designed so the watermark remains detectable even after common alterations such as filters, color changes, and compression. Sven Gowal and Pushmeet Kohli, who lead the SynthID project at DeepMind, emphasized that the tool aims to preserve image quality without compromising the watermark's integrity.

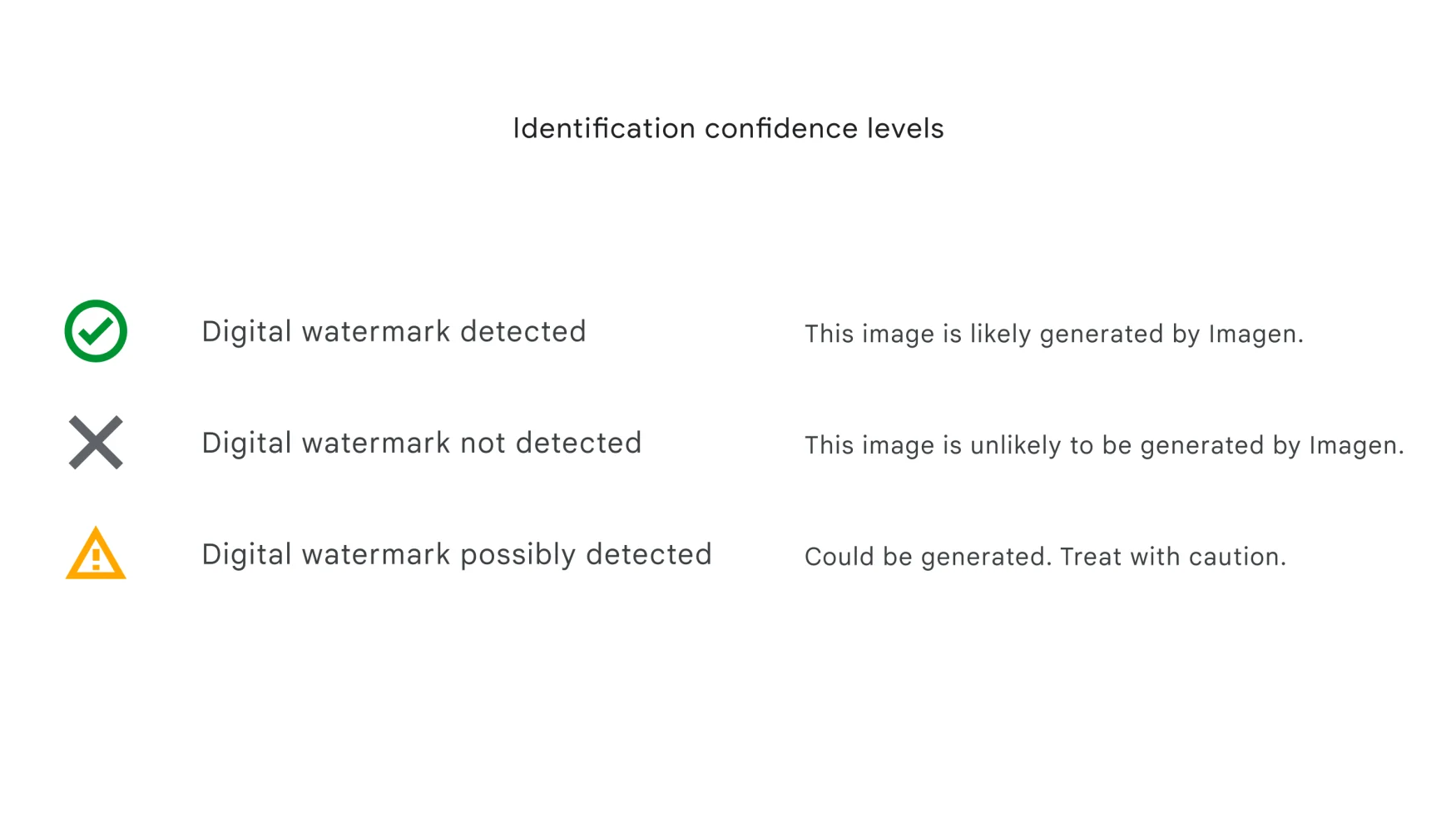

Google DeepMind has also shared more about how SynthID reports its results. When scanning an image, the tool assigns one of three confidence levels for the presence of its watermark: ‘detected’, ‘not detected’, or ‘possibly detected’. Because the watermark lives in the pixels rather than in the file's metadata, Google says SynthID can be used alongside metadata-based methods, such as the one Adobe uses for the generative features in Photoshop, which are currently available in open beta.
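
Google has not published how SynthID arrives at its three labels, so the snippet below is only a minimal sketch under stated assumptions: it imagines a detector that outputs a single score between 0 and 1 and buckets that score with two hypothetical thresholds (`HIGH_THRESHOLD`, `LOW_THRESHOLD`). The names `classify` and `Confidence`, the score itself, and the cut-off values are all illustrative inventions, not part of any Google API.

```python
from enum import Enum


class Confidence(Enum):
    """The three labels SynthID reports for a scanned image."""
    DETECTED = "detected"
    POSSIBLY_DETECTED = "possibly detected"
    NOT_DETECTED = "not detected"


# Hypothetical cut-offs: Google has not disclosed how SynthID scores images.
HIGH_THRESHOLD = 0.9
LOW_THRESHOLD = 0.5


def classify(score: float) -> Confidence:
    """Map a hypothetical detector score in [0, 1] to one of three labels.

    `score` stands in for the output of a watermark-detection model;
    both the score and the thresholds are illustrative assumptions.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError(f"score must be in [0, 1], got {score}")
    if score >= HIGH_THRESHOLD:
        return Confidence.DETECTED
    if score >= LOW_THRESHOLD:
        # Middle band: some watermark signal, but not enough for a firm call.
        return Confidence.POSSIBLY_DETECTED
    return Confidence.NOT_DETECTED


for s in (0.97, 0.7, 0.1):
    print(s, classify(s).value)
```

A middle band like this is one plausible way for a detector to avoid a forced yes/no answer when edits such as cropping or compression may have weakened, but not erased, the watermark.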

SynthID's architecture combines two deep learning models: one embeds the watermark, and the other detects it. According to Google, the two models were trained together on a diverse set of images, forming a single combined ML model. Gowal and Kohli explained that this combined model is optimized for several objectives at once, including correctly identifying watermarked content and improving imperceptibility by visually aligning the watermark with the original content.

Still, Google does not present SynthID as a complete solution. While it touts the watermark's technical robustness as a way to promote responsible use of AI-generated content, it acknowledges that the system is not foolproof against extreme image manipulation. The company envisions extending the approach to other AI models, covering text generation (of the kind produced by ChatGPT), video, and audio.

Despite watermarking's promise for curbing deepfakes, real-world deployment could turn into a cat-and-mouse game between watermarking tools and hackers. Services that adopt SynthID may need frequent updates to stay ahead. Open-source tools such as Stable Diffusion pose another challenge: because they are widely available in many custom builds that run on local PCs, winning industry-wide adoption of SynthID or any similar tool may be difficult. Even so, Google plans to make SynthID available to third parties soon, with the aim of fostering a more transparent AI landscape.