Qualcomm has a long history of running artificial intelligence and machine learning systems directly on devices, without an internet connection, and its camera chipsets have done so for years. At the Snapdragon Summit 2023 on Tuesday, however, the company announced that on-device generative AI is coming to mobile devices and Windows 11 PCs with its new Snapdragon 8 Gen 3 and X Elite chips.

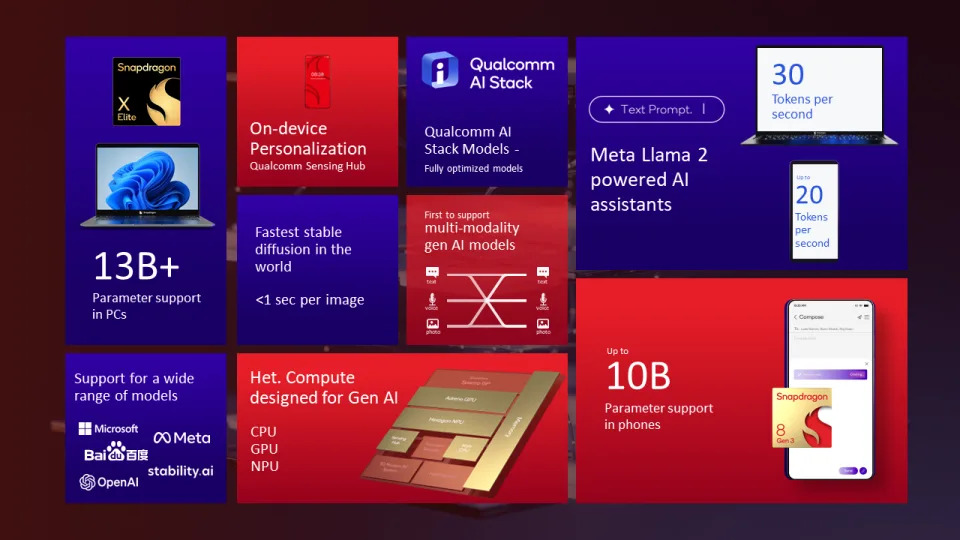

Both chipsets were designed with generative AI in mind. They can run a wide range of large language models (LLMs), language vision models (LVMs), and transformer-based automatic speech recognition (ASR) models entirely on the device: up to 10 billion parameters on the Snapdragon 8 Gen 3 and up to 13 billion on the X Elite. That means users could run models such as Baidu's ERNIE 3.5, OpenAI's Whisper, Meta's Llama 2, or Google's Gecko on their phones and laptops without an internet connection. The chips are optimized for voice, text, and image inputs.

Durga Malladi, Senior Vice President and General Manager of Technology Planning and Edge Solutions at Qualcomm, stressed that these models need a robust foundation, and that heterogeneous compute is key to providing it. The Snapdragon 8 Gen 3 and X Elite each combine a CPU, a GPU, and a Neural Processing Unit (NPU) that work simultaneously, which lets them run multiple models concurrently. On the X Elite, the Qualcomm AI Engine comprises the Oryon CPU, the Adreno GPU, and the Hexagon NPU, which together deliver up to 45 TOPS (trillion operations per second). The chips can generate 30 tokens per second on laptops and 20 tokens per second on mobile devices; tokens are the basic units of text or data that LLMs process. For memory, the chipsets use Samsung's 4.8GHz LPDDR5X DRAM.
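
To put those token rates in perspective, here is a rough back-of-the-envelope calculation. It assumes a constant generation rate and a hypothetical 300-token reply, and it ignores the time spent processing the prompt, so the results are illustrative estimates rather than benchmarks.

```python
# Token-generation rates quoted by Qualcomm at Snapdragon Summit 2023.
RATES_TOKENS_PER_S = {"laptop (X Elite)": 30, "phone (Snapdragon 8 Gen 3)": 20}

# Hypothetical reply length, chosen only for illustration.
REPLY_TOKENS = 300


def generation_seconds(num_tokens: int, tokens_per_s: float) -> float:
    """Time to emit num_tokens at a constant rate (prompt processing ignored)."""
    return num_tokens / tokens_per_s


for device, rate in RATES_TOKENS_PER_S.items():
    # Prints 10.0 s for the laptop and 15.0 s for the phone.
    print(f"{device}: {generation_seconds(REPLY_TOKENS, rate):.1f} s")
```

Under these assumptions, a laptop would finish such a reply in about 10 seconds and a phone in about 15.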

“Generative AI has proven its ability to tackle highly complex tasks efficiently,” he said. Malladi added that the potential applications range from summarizing meetings and documents or drafting emails for consumers to generating computer code or music from prompts for enterprise users.

Generative AI can also be used to create and enhance images. Qualcomm is folding in its earlier edge-AI work, known as the Cognitive ISP. Devices built on these chipsets will be able to edit photos in real time across as many as 12 layers, improve low-light captures, erase unwanted objects from photos (similar to Google's Magic Eraser), and expand image backgrounds. With Truepic photo capture, users can also watermark their photos to certify that they are authentic and not AI-generated.

Moving AI from remote cloud servers onto your phone or laptop offers users several advantages over the current system. Much as enterprise deployments fine-tune general-purpose models on a company's internal data to produce more precise and relevant responses, a locally stored AI gradually becomes personalized to its user over time. As Malladi put it, “The assistant gets smarter and more proficient when operating solely on the device itself.”

Keeping everything local also removes the round-trip delay of cloud-based queries. Both the X Elite and the Snapdragon 8 Gen 3 can run Stable Diffusion on-device and generate an image in under 0.6 seconds.

For consumers, the biggest benefit may be the ability to run larger, more capable models and to talk to them rather than type. Malladi believes this could make voice a more natural and intuitive way to interact with devices. “We believe it has the potential to be a transformative moment, changing the way we engage with devices,” he said.

Mobile devices and PCs are only the first phase of Qualcomm's on-device AI push, and the company is already looking further ahead. The parameter limit, currently 10 to 13 billion, is expected to grow to 20 billion or more in future chip generations. “These are highly sophisticated models, and the use cases built upon them are quite impressive,” Malladi noted.

He also pointed out that in-vehicle applications such as Advanced Driver Assistance Systems (ADAS), which combine data from cameras, IR sensors, radar, lidar, and voice, require much larger models of between 30 and 60 billion parameters. Qualcomm envisions that on-device models could eventually approach or exceed 100 billion parameters.